Most Code is Just Cache

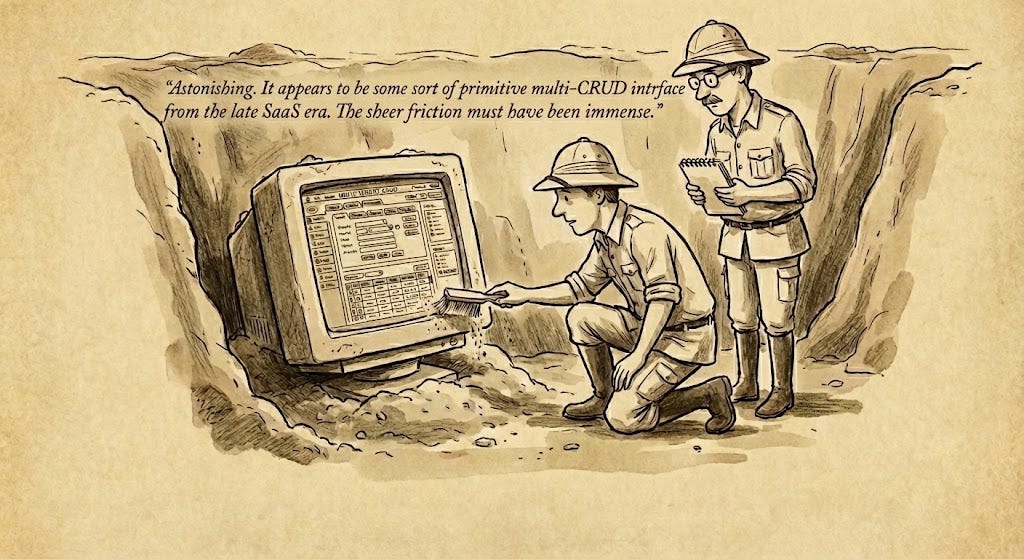

As personal apps become trivial, we should stop thinking of code the same way.

Claude Code has systematically begun to consume many of the SaaS apps I used to (or planned to) pay for.

Why pay a subscription when I can "vibe code" a personal MVP in twenty minutes? I don’t worry about maintenance or vendor lock-in because, frankly, the code is disposable. If I need a new feature tomorrow, I don’t refactor; I just rebuild it. [1]

Code is becoming just an ephemeral cache of my intent.

In this model, the ‘Source Code’ is the prompt and the context; the actual Python or JavaScript that executes is just the binary. We still run the code because it’s thermodynamically efficient and deterministic, but we treat it as disposable. If the behavior needs to change, we don’t refactor the binary; we re-compile the intent.
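To make the "cache of intent" framing concrete, here is a minimal sketch, assuming the intent is just a prompt string plus a context dictionary. The names `intent_key`, `IntentCache`, and `fake_generate` are hypothetical, and `fake_generate` is a stand-in for a model call rather than any real API.

```python
import hashlib
import json


def intent_key(prompt: str, context: dict) -> str:
    """Content-address the 'source': the prompt plus its context."""
    payload = json.dumps({"prompt": prompt, "context": context}, sort_keys=True)
    return hashlib.sha256(payload.encode("utf-8")).hexdigest()


class IntentCache:
    """Maps intent (prompt + context) to generated code, the disposable 'binary'."""

    def __init__(self, generate):
        self._generate = generate  # hypothetical stand-in for an LLM call
        self._store = {}
        self.generations = 0       # how many times we "re-compiled" the intent

    def code_for(self, prompt: str, context: dict) -> str:
        key = intent_key(prompt, context)
        if key not in self._store:  # cache miss: regenerate from intent
            self._store[key] = self._generate(prompt, context)
            self.generations += 1
        return self._store[key]


def fake_generate(prompt: str, context: dict) -> str:
    # Placeholder only: a real system would ask a model to write this code.
    return f"# generated for: {prompt}\nresult = {context['a']} + {context['b']}\n"


cache = IntentCache(fake_generate)
first = cache.code_for("add two numbers", {"a": 1, "b": 2})
again = cache.code_for("add two numbers", {"a": 1, "b": 2})    # cache hit
changed = cache.code_for("add two numbers", {"a": 1, "b": 5})  # new intent, rebuilt
```

Nothing here edits the generated code in place. When the intent changes, the key changes and the code is simply regenerated, which is the "re-compile the intent" idea in miniature.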

This shift has made me intolerant of static interfaces. I have stopped caring about software that doesn’t let me dump massive amounts of context into Gemini or Claude to just do the thing. If a product forces me to click buttons to execute a process that an LLM could intuit from a prompt, that product is already legacy.

It forces us to question the permanence of the current model. We often make the mistake of assuming software—as we know it today—is a permanent fixture of human productivity. But if you zoom out, the era of SaaS is a blink of an eye in modern history. It is easy to overestimate how core it is to the future.

In this post, I want to extrapolate these thoughts a bit and write out what could be the final stages of software.

Software Evolution

The stages here are not necessarily chronological or mutually exclusive. Instead, they are ordered from static to dynamic code generation, where the customer’s intent increasingly becomes the software they use.

Stage 1. Traditional SaaS

This is the baseline where software is a static artifact sold as a service, built on the assumption that user problems are repetitive and predictable enough to be solved by rigid workflows. To the consumer, this looks like dashboards, CRUD forms, and hardcoded automations. The intelligence here is sourced mainly from the SaaS founder and hired domain experts, hard-coded into business logic years before the user ever logs in.

When: We recognized that distributing software via the cloud was more efficient than on-premise installations.

Value Loop: Customer Problem → Product Manager writes PRD → Engineers write Static Code → Deploy → Customer adapts their workflow to the tool. (Time: Months to Years | Fit: Generic / One-size-fits-none)

Stage 2. FDEs and AI Builders

We are seeing this now with companies adopting the Forward Deployed Engineering (FDE) model. In this stage, the SaaS company hires humans to manually use AI to build bespoke solutions for the client. For the consumer, this feels like a concierge service; they don’t get a login to a generic tool, they get a custom-built outcome delivered by a human who used AI to write the glue code. The intelligence is hybrid: the human provides the architecture, and the AI writes the implementation code in days to weeks.

When: Companies realize AI allows their employees to build custom apps for clients faster than the clients can learn or adapt to a generic tool.

Value Loop: Customer Problem → SaaS Employee (FDE) Prompts AI → AI generates Custom Script/App → Employee Deploys for Customer. (Time: Days | Fit: High / Tailored to specific customer edge cases)

Stage 3. AI Features (Product is Platform/Interface)

This is the current “safe space” for most tech companies, where they bolt an LLM onto an existing application to handle unstructured data. Consumers experience this as a “Draft Email” button in their CRM or a “Chat” sidebar in their UI. The platform is still the main product, but AI is a feature that (hopefully) reduces friction and/or provides some extra customization of functionality. [2] The intelligence comes from a constrained model of product design and LLM scaffolding, providing content within a structure still strictly dictated by the SaaS platform’s code.

When: People start to see AI is good at summarizing, generating content, or taking actions within existing workflows.

Value Loop: Customer Problem → AI Feature Text Box in a Static SaaS Interface → Stochastic Result → Human Review. (Time: Minutes | Fit: Medium / Constrained by the platform’s UI)

Stage 4. AI Products (Product is Data/Context)

This is the tipping point where the software interface starts to disappear because the “interface” was just a way to collect context that the model can now ingest directly. Consumers move to a “Do this for me” interface where intent maps directly to an outcome rather than a button click, often realized as an agent calling a database or MCP servers. [3] The intelligence is the model and its engineered input context, which relegates the SaaS role to, in some sense, providing clean proprietary data via an agent-friendly interface: Software as a Service for Agents.

When: People start to see AI is good at orchestrating complex decisions and using tools—across SaaS platforms—autonomously.

Value Loop: Customer Problem (Prompt as ~PRD) → Runtime Code Generation → Dynamic Outcome. (Time: Real-time | Fit: Very High / Dynamically generated for the specific context)

Critically, this doesn't mean the LLM acts as the CPU for every single user interaction (which would be slow and energy-inefficient). Instead, the model acts almost like a Just-In-Time compiler. It generates the necessary code to execute the user’s intent, runs that code for the session, and then potentially discards it.
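The Just-In-Time analogy can be sketched roughly as follows, assuming a hypothetical `stub_model` that returns Python source defining a `handler` function. The `Session` class and its fields are illustrative names, not an existing framework, and real model output would need sandboxing before it ever reached `exec`.

```python
class Session:
    """Generate code once per intent, reuse it for the session, then drop it."""

    def __init__(self, model):
        self._model = model    # hypothetical callable: intent -> Python source
        self._compiled = {}    # intent -> callable, lives only for the session
        self.model_calls = 0

    def __enter__(self):
        return self

    def __exit__(self, exc_type, exc, tb):
        self._compiled.clear()  # generated code is discarded with the session
        return False

    def run(self, intent, *args):
        fn = self._compiled.get(intent)
        if fn is None:
            source = self._model(intent)  # the only step that involves the model
            self.model_calls += 1
            namespace = {}
            exec(source, namespace)       # trusted stub here; sandbox real output
            fn = namespace["handler"]
            self._compiled[intent] = fn
        return fn(*args)                  # plain deterministic execution


def stub_model(intent):
    # Stand-in for a code-generating model; always returns the same source.
    return "def handler(prices):\n    return sum(prices) / len(prices)\n"


with Session(stub_model) as session:
    averages = [session.run("average these prices", [p, p + 2]) for p in range(1000)]
    calls = session.model_calls
```

In this sketch a thousand requests cost one model call; every other request is ordinary code execution, which is the efficiency argument for keeping the model out of the hot path.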

Stage 5. AI Training Environments

This is the end game in some cases. If code is just a cache for intent, eventually we bypass the cache and bake the intuition directly into the model. To the consumer, the “tool” is invisible; the expert system simply exists and provides answers or actions without a login or workflow. The intelligence is in the model itself; the software platform exists solely as a distillation mechanism (a gym to train the vertical AI), and once the model learns the domain, the software is no longer needed. A company in this stage is not really even SaaS anymore; it is maybe more of an AI-gyms-aaS company.

When: People start to see AI is good at absorbing the entire vertical’s intuition.

Value Loop: Raw Domain Data → Reinforcement Learning / Fine-Tuning → Model Weights. (Time: Instant / Pre-computed | Fit: Very High / Intuitive domain mastery)

This might feel unintuitive as a stage: how could you bake some proprietary data lake into a model? How can our juicy data not be the moat? My conclusion is that most (but not all) data is a transformation of rawer upstream inputs, and that these transformations (data pipelines, cross-tenant analysis, human research, etc.) are all “cache” that can be distilled into a more general model that operates on its intuition and upstream platform inputs.

Rebuttals

“But can agents run a bank?” Reliability and safety come down to distinguishing between guardrails (deterministic interfaces and scaffolding) and runtime execution (LLM code). For now, you don’t let the LLM invent the concept of a transaction ledger or rewrite the core banking loop on the fly. In XX years, maybe we do trust AI to write core transaction logic; after all, fallible humans wrote the code for most mission-critical software that exists today. The line between human-defined determinism and agent symbolic interfaces will gradually move over time.
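One way to picture the guardrail versus runtime split is the sketch below, assuming a toy in-memory `Ledger` and an agent that proposes a plan as a list of dictionaries. All names here (`Ledger`, `run_plan`, the `op` field) are hypothetical and chosen only for illustration.

```python
class Ledger:
    """Deterministic guardrail: the only way to move money is transfer()."""

    def __init__(self, balances):
        self._balances = dict(balances)

    def balance(self, account):
        return self._balances[account]

    def total(self):
        return sum(self._balances.values())

    def transfer(self, src, dst, amount):
        # Invariants are enforced here, regardless of who wrote the caller.
        if src not in self._balances or dst not in self._balances:
            raise ValueError("unknown account")
        if type(amount) is not int or amount <= 0:
            raise ValueError("amount must be a positive integer (cents)")
        if self._balances[src] < amount:
            raise ValueError("insufficient funds")
        self._balances[src] -= amount
        self._balances[dst] += amount


def run_plan(ledger, plan):
    """Execute agent-proposed steps; reject anything outside the interface."""
    results = []
    for step in plan:
        if step.get("op") != "transfer":
            results.append("rejected: unsupported op")
            continue
        try:
            ledger.transfer(step["src"], step["dst"], step["amount"])
            results.append("ok")
        except (KeyError, ValueError) as err:
            results.append(f"rejected: {err}")
    return results


ledger = Ledger({"alice": 10_000, "bob": 2_500})
plan = [  # imagine this list was produced by a model at runtime
    {"op": "transfer", "src": "alice", "dst": "bob", "amount": 4_000},
    {"op": "transfer", "src": "bob", "dst": "alice", "amount": 999_999},
    {"op": "set_balance", "account": "bob", "amount": 10**9},
]
results = run_plan(ledger, plan)
```

The generated plan can be arbitrarily creative, but it can only act through `transfer`, so the total amount of money in the ledger stays constant whatever the model proposes.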

“But enterprise SaaS is actually super complex.” Yes, but that complexity is mostly just unresolved ambiguity. Your “deep enterprise understanding” is often a collection of thousands of edge cases—permissions, policy exceptions, region-specific rules—that humans had to manually hard-code into IF/ELSE statements over a decade. Distilled to the core, this complexity collapses. The model doesn’t need 500 hard-coded features; it needs the raw data and the intent. An app built for one can also make a lot of simplifications compared to one that acts as a platform.

“Customers don’t want to prompt features.” I agree. I don’t think the future looks like a chatbot. “Chat” is a skeuomorphic bridge we use because we haven’t figured out the consistent native interface yet. It might be a UI that pre-emptively changes based on your role, or it might feel like hiring a really competent employee who just “takes care of it” without you needing to specify the how. Or, as we see in Stage 2, the user never prompts at all—an FDE does it for them, and the user just gets a bespoke app that works perfectly.

Is SaaS Dead?

Stage 1, where most companies are stuck today, definitely is.

Why? Because the sheer overhead of traditional SaaS—the learning curve, the rigid workflows, the "click tax" to get work done—is becoming unacceptable in a world where intent can be executed directly. It feels increasingly archaic when flexible solutions can be generated on demand.

The value is moving away from the workflow logic itself and toward two specific layers that sandwich it:

The Data Layer: Proprietary data, trust, and the “agentic scaffolding” that allows models to act safely within your domain.

The Presentation Layer: Brand and UI. While I suspect trying to control the presentation layer long-term is futile (as users will eventually bring their own “interface agents” to interact with your data), for now, it remains a differentiator.

We are going to see companies move through these tiers. The winners IMO will be the ones who realize that the "Service" part of SaaS is being replaced by model intelligence. The SaaS that remains will be the infrastructure of truth and the engine of agency.

Conclusion

We are transitioning from a world of static artifacts (code that persists for years) to dynamic generations (code that exists for milliseconds or for a single answer).

Of course, I could be wrong. Maybe AI capability plateaus before it can fully integrate into complex verticals. Maybe traditional SaaS holds the line at Stage 2 or 3, protecting its moat through sheer inertia. Maybe the world ends up more decentralized.

Some of my open questions:

Which stage should you work on today? Is there alpha in skipping straight to Stage 4, or do you need to build the Stage 2 “vibe coding” service to bootstrap for now?

What are the interfaces of the future? Is it MCP, curated compute sandboxes, or a yet-to-be-defined agent-to-agent-to-human protocol? Does one interface win out, or does each company or consumer bring their own agentic worker?

How fast does this happen? Are we looking at a multi-decade transition, or do companies today rapidly start dropping lower-stage SaaS tools?

Does AI have a similar impact beyond software? Does medicine move from “static protocols” to “on-demand, patient-specific treatments”?

For an even more extreme example than mine, see Geoffrey Huntley’s ralph-powered rampage of GitHub and many other tools.

Similar to Karpathy’s “LLMs not as a chatbot, but the kernel process of a new Operating System” (2023)

"The Presentation Layer: Brand and UI. While I suspect trying to control the presentation layer long-term is futile (as users will eventually bring their own “interface agents” to interact with your data), for now, it remains a differentiator."

Isn't the point of the UI to remove the mental load from the user of having to deeply understand the business case?

I'm much more efficient with CLIs than UIs, but that's only because I deeply understand operating systems. Learning new CLIs is hard because it's learning a whole new set of commands and concepts. The point of a static UI is that I'm able to learn incrementally.

I like the idea of dynamic UIs, but only for things that I know very well, and I'm technically minded. The idea of having to work with new systems with a "customized UI" seems counter-productive. I want a static UI someone else has experience with. This idea of "the UI adapts the data to you" always seemed weird to me because like, I'm not nearly smart enough for that. I hate when Apple changes the color of an iPhone button; imagine every UI I work with being different every time I try to use it.

Also, doesn't this mean I'm constantly a beginner and never get good at my tools? Reliable UIs allow me to turn my brain off. Does everyone else in the world secretly wish things changed more, and I'm the only one too dumb to want this?

> we haven’t figured out the consistent native interface yet.

This line is doing a lot of heavy lifting. Curious to hear you say more about this. Are you looking towards Ink & Switch, or Cursor, or from creatives like Bret Victor...? Are you willing to try novel interfaces, or is there more weight on the "consistent" aspect?