How to Stop Your Human From Hallucinating

When humans sometimes act like confused language models.

We talk a lot about AI "hallucinations" [1] – when Large Language Models (LLMs) confidently state falsehoods or make things up. As these models become more and more integrated into our daily workflows, there end up being three types of people:

1. Those who can’t use AI non-trivially without a debilitating amount of "hallucinations". They know AI makes them less productive.

2. Those who use AI for most things without realizing how much they are blindly trusting its inaccuracies. They don’t realize when AI makes them less productive or how to cope with inconsistency.

3. Those who use AI for most things but have redirected more time and effort into context communication and review. They understand how to cope with limitations while still being able to lean on AI consistently (see Working with Systems Smarter Than You).

More recently I’ve been reflecting on parallels between these archetypes and human systems (e.g. managers managing people ~ people managing AI assistants). Originally, I was thinking through how human organization can influence multi-agent system design, but also how LLM-based agent design can improve human organization and processes. While being cautious with my anthropomorphizing, I can’t help but think that types 1 and 2 could be more successful if they considered an LLM’s flaws more similar to human ones.

In this post, I wanted to give some concrete examples of where human systems can go wrong in the same ways LLMs "hallucinate" and how this informs better human+AI system design.

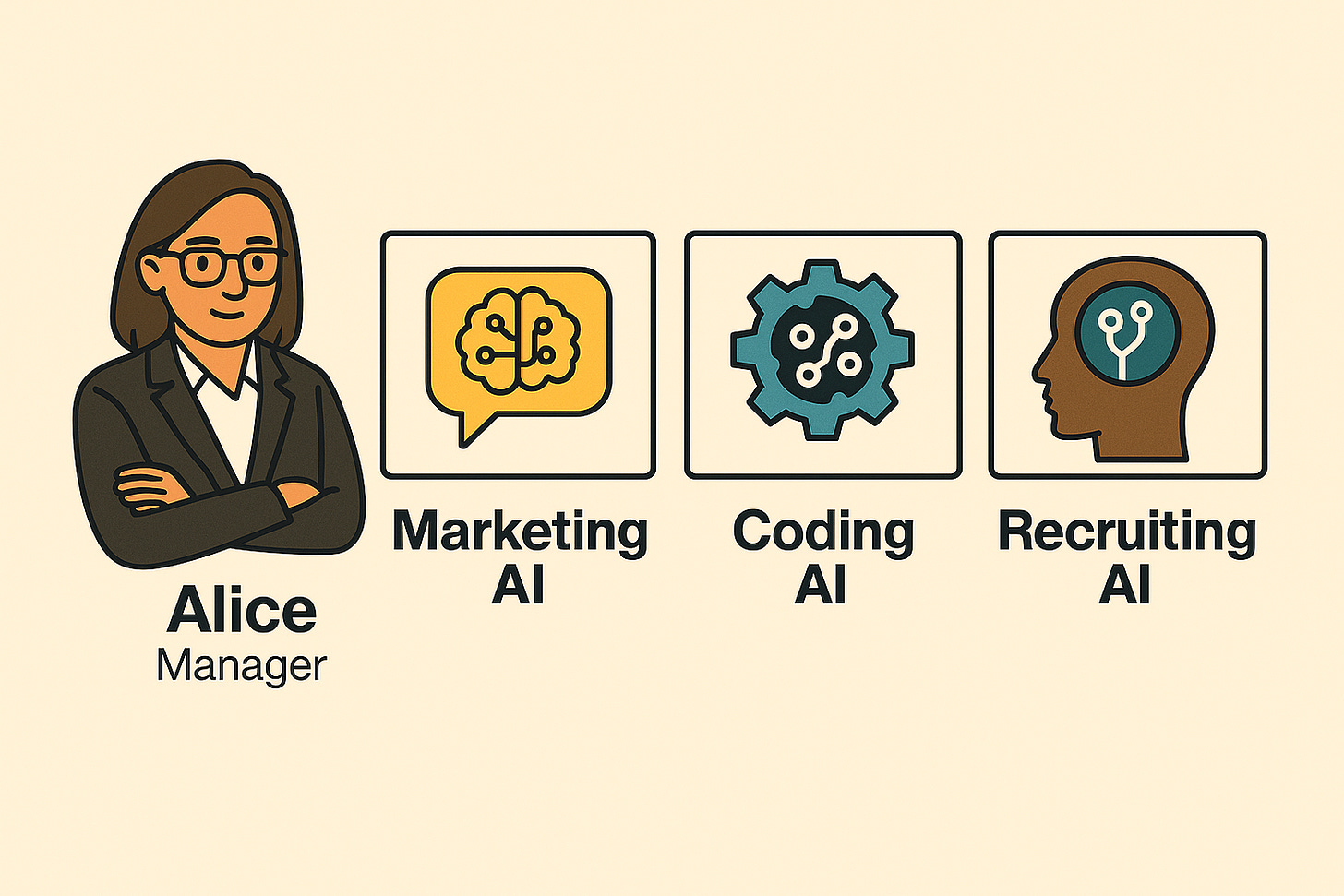

Meet Alice & Team

Meet Alice, a hypothetical manager at a small, high-growth social media company. As a systems thinker, Alice believes good processes beat heroics. She can't keep up with her workload doing everything herself, so when finance approves budget for three new workers, she leaps at the chance to build a scalable "people system".

After several rounds of interviews, she hires her team:

Bob – Marketing analyst, hired to build growth forecasts.

Charlie – Software engineer, hired to untangle legacy auth.

Dave – Recruiter, tasked with doubling team size by EOY.

All three are undeniably smart. All three will “hallucinate” in spectacularly different ways [2]. When they do, Alice doesn’t ask them to redo the work, re-hire, or do the work herself. Instead, she redesigns each of their processes to make them and their future teams more effective.

Case 1: Bob’s Phantom Growth Curve

Monday 09:10 AM — someone drops a Slack:

“Please prep a 2024 organic-growth forecast for QBR.

• Use the most recent 30-day data

• Break it down by channel

• Include the Activation segment we track for PLG”

Bob, two weeks in, types “organic growth” into Looker. Nineteen dashboards appear; the top hit is ga_sessions_all—huge row count, nightly refresh, so it looks authoritative.

Halfway through, they add:

“Need a quarter-over-quarter 2023 comparison so we can show the delta.”

Those tables don’t exist for the new server-side pipeline, so Bob sticks with Google Analytics (GA) and splices in a 2023 view an intern built for hack-week.

Slides ship at 6 PM: “Activation Drives 17% MoM Organic Lift.”

At Tuesday’s rehearsal: Product asks which “Activation” he used. Bob blinks—there’s only one to him. Ten minutes later everyone realises the entire forecast rides on the wrong metric, the wrong source, and a one-off intern table.

What went wrong:

Constraint collision: “Last 30 days” and “QoQ 2023” forced him to choose a dataset that satisfied only one request.

No signal hierarchy: An intern’s hack-week table looked as “official” as the curated view.

Jargon clash: “Activation” is generic marketing slang, but internally it marks users who complete an onboarding quiz.

Hidden documentation: The correct dataset lived four folders deep; search indexing buried it.

Outdated pipeline: GA misses 50% of traffic now captured server-side; Bob never knew.

How Alice adjusted:

Surface-the-canon: Dashboards and tables now carry a Source-of-Truth badge and float to the top of search; deprecated assets auto-label DEPRECATED.

Constraint-aware dashboards: Every canonical view lists guaranteed fields, supported time ranges, and shows a red banner, “QoQ view not available”, if a request exceeds its scope. Analysts can’t export mismatched slices without reading this warning.

Language safety-net: A mandatory onboarding doc provides the company-specific meaning, owner, and freshness for terms like Activation, killing jargon drift.

Bob experienced a “bad inputs hallucination”. Alice addressed it by cleaning up and refining context.
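The constraint-aware dashboard idea can be sketched in a few lines. This is a minimal illustration, not Alice's actual tooling: `CanonicalView`, `check_request`, the view name `organic_growth_server_side`, and the range labels are all hypothetical names made up for the example.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class CanonicalView:
    """Hypothetical metadata attached to a source-of-truth view."""
    name: str
    fields: frozenset            # fields the view guarantees
    supported_ranges: frozenset  # time ranges the view can answer
    deprecated: bool = False


def check_request(view, wanted_fields, wanted_range):
    """Return a list of warnings; an empty list means the request is in scope."""
    warnings = []
    if view.deprecated:
        warnings.append(f"{view.name} is DEPRECATED")
    missing = sorted(set(wanted_fields) - view.fields)
    if missing:
        warnings.append(f"fields not guaranteed: {', '.join(missing)}")
    if wanted_range not in view.supported_ranges:
        warnings.append(f"{wanted_range} view not available")
    return warnings


# Example view: supports the last 30 days only, so a QoQ 2023 request is flagged.
view = CanonicalView(
    name="organic_growth_server_side",
    fields=frozenset({"channel", "activation"}),
    supported_ranges=frozenset({"last_30_days"}),
)
```

With this shape, Bob's second request (`check_request(view, ["channel"], "qoq_2023")`) returns a warning instead of silently pushing him toward a different dataset.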

Case 2: Charlie’s One-Line Patch that Broke Mobile

Wednesday 11:42 AM, PagerDuty flares: sporadic race-condition errors in the auth service. Alice Slacks Charlie, the new engineer:

“Mobile log-ins are spiking. Can you hot-patch the mutex logic before the 1 PM exec review?”

Charlie opens AuthHandler.java, wraps refreshToken() in a synchronized block, and runs unit tests—green across the board. Jira still blocks merge until he fills ten mandatory fields (impact score, rollout plan, risk level). He copies placeholder text from yesterday’s ticket, hits Merge, and grabs a coffee.

Twelve minutes after deploy, Android log-in latency leaps to 500 ms. Mobile clients call refreshToken() twice, deadlocking on Charlie’s new lock. Rollback ensues.

What went wrong:

Time-crunch override: “Patch before exec review” compressed the thinking window to near zero.

Field-first autopilot: Jira’s ten required fields were completed before Charlie articulated his approach, so the ticket captured no real reasoning.

No plan: He typed code without first jotting ideas & alternatives, leaving assumptions unexamined.

Shallow review: The tiny three-line PR was rubber-stamped. The reviewer glanced at syntax but had no checklist for concurrency side effects, so the deadlock risk slid by.

How Alice adjusted:

Design first: Certain prod changes start with a half-page change-doc (intent, alternatives, blast radius). The ticket fields auto-populate from this draft, so explanation precedes form-filling.

Self-validation ritual: Draft must list at least one alternative approach and one failure case; author checks both before coding and secondary reviews.

Encourage exploration: Engineers block the first few minutes of a fix to free-write sketches, no format, just possibilities. Rough notes are reviewed in a same-day sync so risky branches surface before any code is written.

Charlie experienced a “constrained thought hallucination”. Alice addressed it by creating space and checkpoints for solving complex problems.
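To make the "green unit tests, broken production" gap concrete, here is a minimal sketch in Python rather than the story's Java. It assumes a non-reentrant lock (`threading.Lock`); `refresh_token` and its `depth` parameter are hypothetical stand-ins for a call path that re-enters the locked section, and the timeout exists only so the example returns instead of hanging.

```python
import threading

lock = threading.Lock()  # non-reentrant: a second acquire by the same thread blocks


def refresh_token(depth=0, timeout=0.1):
    """Hypothetical patched call. Returns False if the lock could not be taken."""
    if not lock.acquire(timeout=timeout):
        return False  # in production, without a timeout, this would hang
    try:
        if depth > 0:
            # Simulates a client path that re-enters the locked section.
            return refresh_token(depth - 1, timeout)
        return True
    finally:
        lock.release()


single_call_ok = refresh_token()          # the path the unit tests exercised
nested_call_ok = refresh_token(depth=1)   # the path mobile clients actually took
```

A failure case like the nested call is exactly what the change-doc's "one failure case" field is meant to surface before the code is written.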

Case 3: Dave’s Pipeline to Nowhere

Thursday 09:30 AM — Budget finally lands to grow the team. Alice fires off a quick Slack to Dave, the new recruiter:

“Goal: fill every open role ASAP. First slate in two weeks—use the JD we sent out for last year’s Staff Backend hire as a reference.”

Dave dives in, copies the old job post, tweaks a few lines, and launches a LinkedIn blitz: 500 InMails, 40 screens booked. Two weeks later he delivers a spreadsheet titled “Backend Slate”: 30 senior engineers, half of whom require relocation. None match the targets Finance just announced, and exactly zero are data scientists (the role Product cares about most).

Engineering leads groan; PMs are confused; Finance is furious that relocation wasn’t budgeted. Dave is equally baffled: he did what the Slack said.

What went wrong:

Blurry objective: “Fill every open role” masked eight unique positions—backend, data science, ML Ops, and two internships.

Example overfitting: Dave treated last year’s Staff Backend JD as the canonical spec; every search term, filter, and boolean string anchored there.

Missing Do/Don’t list: No “Supported vs Not Supported” notes on level, location, visa status, or diversity goals.

Collaboration gap: Dave had no interface map—he didn’t know Product owns data-science roles or that Finance owns relocation budgets.

Hidden assumptions: “Remote-friendly” means “within U.S. time zones” internally, but Dave took it literally and sourced from 13 countries.

Zero acceptance criteria: Spreadsheet columns didn’t match ATS import; hiring managers couldn’t even load the data.

No back-out clause: When goals changed mid-search, Dave had no explicit stop-and-clarify trigger, so he just kept sourcing.

How Alice adjusted:

Scope charter: A one-page Role-Intake doc for every search—lists Do / Don’t, Supported / Not Supported, critical assumptions, and an “If unknown, ask X” field.

Collaboration map & back-out clause: Doc names the decision-owner for comp, diversity, tech stack, and visa. Any conflicting info triggers a mandatory pause in the Slack channel #scope-check.

Definition of done: Each role ships with an acceptance checklist (level, location, diversity target, salary band) and an ATS-ready CSV template; slates that miss either bounce automatically.

Dave experienced an “ambiguity hallucination”. Alice addressed it by clarifying instructions and providing a back-out clause.
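The "definition of done" check can be automated in a small way. The sketch below is illustrative only: the column names in `REQUIRED_COLUMNS`, the `validate_slate` function, and the role and location values are assumptions, not a real ATS format.

```python
import csv
import io

# Hypothetical ATS-ready template; a real ATS would define its own columns.
REQUIRED_COLUMNS = ["name", "role", "level", "location", "salary_band"]


def validate_slate(csv_text, allowed_roles, allowed_locations):
    """Return a list of problems; an empty list means the slate can be accepted."""
    reader = csv.DictReader(io.StringIO(csv_text))
    header = reader.fieldnames or []
    missing = [c for c in REQUIRED_COLUMNS if c not in header]
    if missing:
        # Bounce immediately: the file would not even import.
        return [f"missing columns: {', '.join(missing)}"]
    problems = []
    for line_no, row in enumerate(reader, start=2):  # line 1 is the header
        if row["role"] not in allowed_roles:
            problems.append(f"line {line_no}: role '{row['role']}' is out of scope")
        if row["location"] not in allowed_locations:
            problems.append(f"line {line_no}: location '{row['location']}' is not supported")
    return problems
```

Run against Dave's original spreadsheet, a check like this would have bounced the slate on day one rather than after two weeks of sourcing.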

Empathy for people (and LLMs trained on them)

In each of these contrived cases, no one is being dumb or malicious, and yet the systems and context set things up for failure. Alice, rather than resorting to doing the work herself or trying to hire a more capable team, invests in the systemic failure points.

Now, if we swapped out these new hires for LLM-based agents (and reduced their scope a bit based on today’s model capabilities), there’s a strong chance that a type-1 user, in place of Alice, would have just dismissed their usefulness because “they keep hallucinating”. LLMs aren’t perfect and many applications are indeed “just hype”, but I’ll claim that most modern LLM [3] “hallucinations” actually fall into the mostly solvable case studies above. You just have to think more like Alice (for software engineers, see AI-powered Software Engineering).

Admittedly, there are a few critical differences with LLMs that make it less intuitive to solve these types of systemic problems compared to working with people:

A lack of native continuous and multimodal learning

Unlike a human who can continuously learn from experience, most people work with stateless LLMs [4]. To get an LLM to improve, a person needs to both understand what context was lacking and provide that manually as text in all future sessions. This workflow isn’t very intuitive and relies on conscious effort by the user (as the AI’s manager) to make any improvement.

For now: continuously update the context of your GPTs/Projects/etc to encode your constraints, instructions, and expected outcomes.
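One way to picture this manual "learning" loop is a notes file that gets prepended to every new session. This is a minimal sketch under that assumption; `load_notes`, `add_note`, `build_prompt`, and the JSON file layout are hypothetical, and no particular LLM API is implied.

```python
import json
from pathlib import Path


def load_notes(path):
    """Read previously recorded lessons, or an empty list if none exist yet."""
    p = Path(path)
    return json.loads(p.read_text()) if p.exists() else []


def add_note(path, note):
    """Record a lesson once; duplicates are ignored."""
    notes = load_notes(path)
    if note not in notes:
        notes.append(note)
        Path(path).write_text(json.dumps(notes, indent=2))
    return notes


def build_prompt(path, task):
    """Prepend every recorded lesson to the task, since the model keeps no state."""
    notes = load_notes(path)
    lessons = "\n".join(f"- {n}" for n in notes) or "- (none yet)"
    return f"Lessons from earlier sessions:\n{lessons}\n\nTask:\n{task}"
```

Each time a session goes wrong because of missing context, the fix is recorded with `add_note` so that the next `build_prompt` call carries it forward.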

Poor defaults and Q&A calibration

A human, even if explicitly told to provide advice from an article about putting glue on pizza, will know that this is not right nor aligned with the goals of their manager. LLMs on the other hand will often default to doing exactly as they are told to do even if that goes against common sense or means providing an incorrect answer to an unsolvable problem. For people building apps on LLMs, the trick is often to provide strong language and back-out clauses (“only provide answers from the context provided, don’t make things up, if you don’t know say you don’t know”) but ideally these statements should be baked into the model itself.

For now: calibrate your LLMs manually with prompts that include the scope of decisions (both what to do and what not to) and information it can use.
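A scoped prompt with a back-out clause might be assembled like this. The sketch is only an illustration of the structure described above; `build_system_prompt` and its parameters are hypothetical, and the exact wording that works best will vary by model.

```python
def build_system_prompt(do, dont, context_docs, escalate_to):
    """Assemble a system prompt that states scope, sources, and a back-out clause."""
    lines = ["You are assisting with a narrowly scoped task."]
    lines.append("Do:")
    lines += [f"- {item}" for item in do]
    lines.append("Do not:")
    lines += [f"- {item}" for item in dont]
    lines.append("Use only the following context:")
    lines += [f"[{i}] {doc}" for i, doc in enumerate(context_docs, start=1)]
    # Back-out clause: give the model an explicit alternative to guessing.
    lines.append(
        "If the context does not contain the answer, or instructions conflict, "
        f"say you don't know and ask {escalate_to} for clarification."
    )
    return "\n".join(lines)


prompt = build_system_prompt(
    do=["Answer only from the provided context"],
    dont=["Make things up"],
    context_docs=["Activation = users who complete the onboarding quiz"],
    escalate_to="the user",
)
```

This mirrors Alice's Role-Intake doc: Do / Don't, the information that may be used, and an "if unknown, ask X" field.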

Hidden application context

It can sometimes be more obvious what context a human has compared to an LLM you are interacting with. Applications, often via system prompts, include detailed behavioral instructions that are completely hidden from the user. These prompts can often heavily steer the LLM in ways that are opaque and unintuitive to an end-user. The LLM may also be presented with false information (e.g. via some RAG system) without context on whether it’s up-to-date, whether it applies, or how much it can be trusted.

For now: find and understand the hidden system prompts in the applications you use while preferring assistants with transparent context [5].

A Law of Relative Intelligence

To take this a step further, I think what most people consider "hallucinations" are actually pretty fundamental to any generally intelligent system.

Law [6]: Any generally intelligent Q&A system (human, silicon, or alien) will emit confident falsehoods when:

Inputs are under-constrained, inconsistent, and/or ambiguous

Reasoning “compute” budget is limited

Incentives reward giving an answer more than withholding one or asking for clarification

Assuming this, there’s also no such thing as "solving hallucinations". Instead, I expect model providers will continue to calibrate LLMs to align with human preferences, and applications will find ways to integrate continuous learning and intuitively instructed assistants. Ultimately, it’s about building more effective human+AI systems through understanding and smarter process design, recognizing that the flaws we see in LLMs often reflect the complexities inherent in the environment rather than purely limitations of the technology.

[1] I’m sure some will debate what “hallucinations” in the context of LLMs means and whether that’s even the right word to use. Wikipedia describes it as, “a response generated by AI that contains false or misleading information presented as fact.” Personally, I see why we started calling it that but I think I also would prefer a better term (I’m open to ideas).

[2] If it’s not obvious, these case narratives are heavily AI-generated. I thought it was best to explain the human-LLM analogy with examples like this from my raw notes on types of hallucinations. The examples are just meant to be illustrative and framed to show the similarities between human and LLM failure modes.

[3] By “modern” LLMs I mean models that are OpenAI o1-class and above, although it’s not easy to draw a clear line between “hallucinations” due to a truly limited model versus “hallucinations” due to missing or poor context. My main claim is that with today’s models it’s mostly the latter, but of course there’s no obvious way to measure this.

[4] This kind of stateless intelligence is sometimes compared to the concept of a Boltzmann brain. I think it’s also fun to think of them as similar to a real-life Mr. Meeseeks. For entertainment, here’s a Gemini-generated essay: Existence is Pain: Mr. Meeseeks, Boltzmann Brains, and Stateless LLMs. It’s possible that features like ChatGPT memory will mitigate this, but I think we are still in the early stages of figuring out how to make LLMs actually learn from experience.

[5] This reminded me of Simon Willison’s article, “One of the reasons I mostly work directly with the ChatGPT and Claude web or app interfaces is that it makes it easier for me to understand exactly what is going into the context. LLM tools that obscure that context from me are less effective.”

[6] I am in no way qualified to formalize a “law” like this but thought it would be handy to get Gemini to write up something more formal to pressure test this: Justification for the Law of Relative Intelligence. I had 2.5-pro and o3 battle this out until I felt the counterarguments became unreasonable.

Hi Shrivu, cool article! In agreement that systems thinking is important for managing both people and AI, but think the examples provided set the expectations for human competence pretty low. For Case 1, is the brand new data analyst really not going to ask their manager which dataset they should be using so that they can get to work on their projections ASAP? For Case 3, is a competent recruiter really never going to clarify what positions they're hiring for in the first place, especially over a 2 week period? For me, the ability to ask clarifying questions independently is an important distinction between humans and AI, would be interested to hear your thoughts on this