Terrain Diffusion

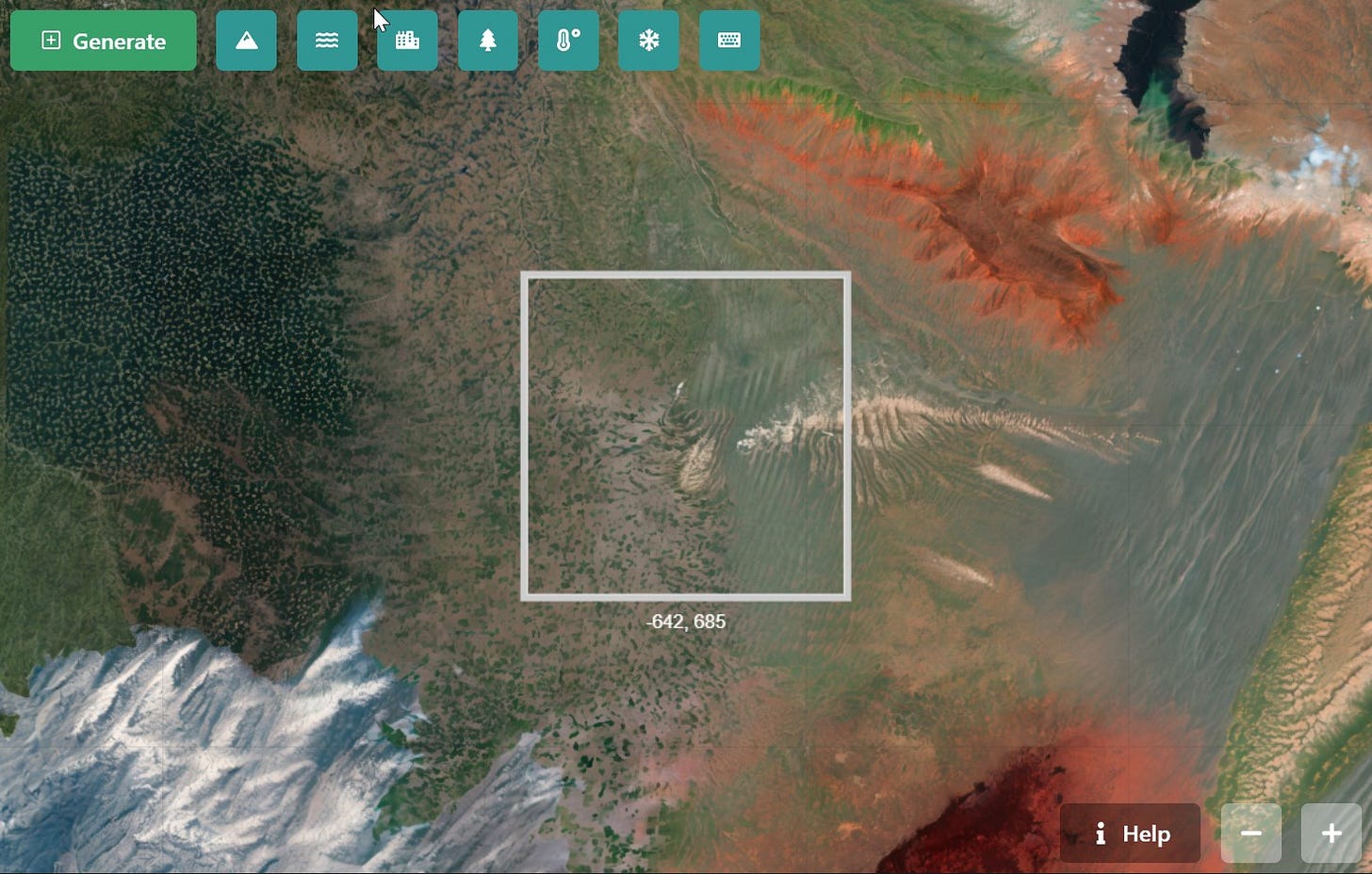

Paint infinite landscapes using diffusion models.

You can try this project out at terrain.sshh.io.

Hey all, I made another AI-based game (or interactive art project?). The project uses an image inpainting model to dynamically generate infinite user-defined landscapes.

Inspiration

I’m pretty fascinated by the idea of using AI to scale virtual worlds and game assets (see Infinite Alchemy), and I wanted to try building my very own completely AI-based, procedurally generated space exploration game similar to No Man’s Sky. One issue I have with a lot of these massive procedurally generated games is that, while infinite and diverse, their planets and landscapes eventually all seem to follow the same formula.

It feels as if the underlying generation code is something like:

planetColor = randomColor()

planetSize = randomSize()

planetMountains = randomHeight()

planetWater = randomOcean()

While this does make for infinitely many variations of virtual environments, these environments are not geologically consistent and all end up looking the same the more you explore. In reality, every part of a planet’s geology is uniquely defined by its position in its solar system, the presence of certain materials in its orbit, and impacts throughout its formation (shoutout to GEO303C on astrogeology).

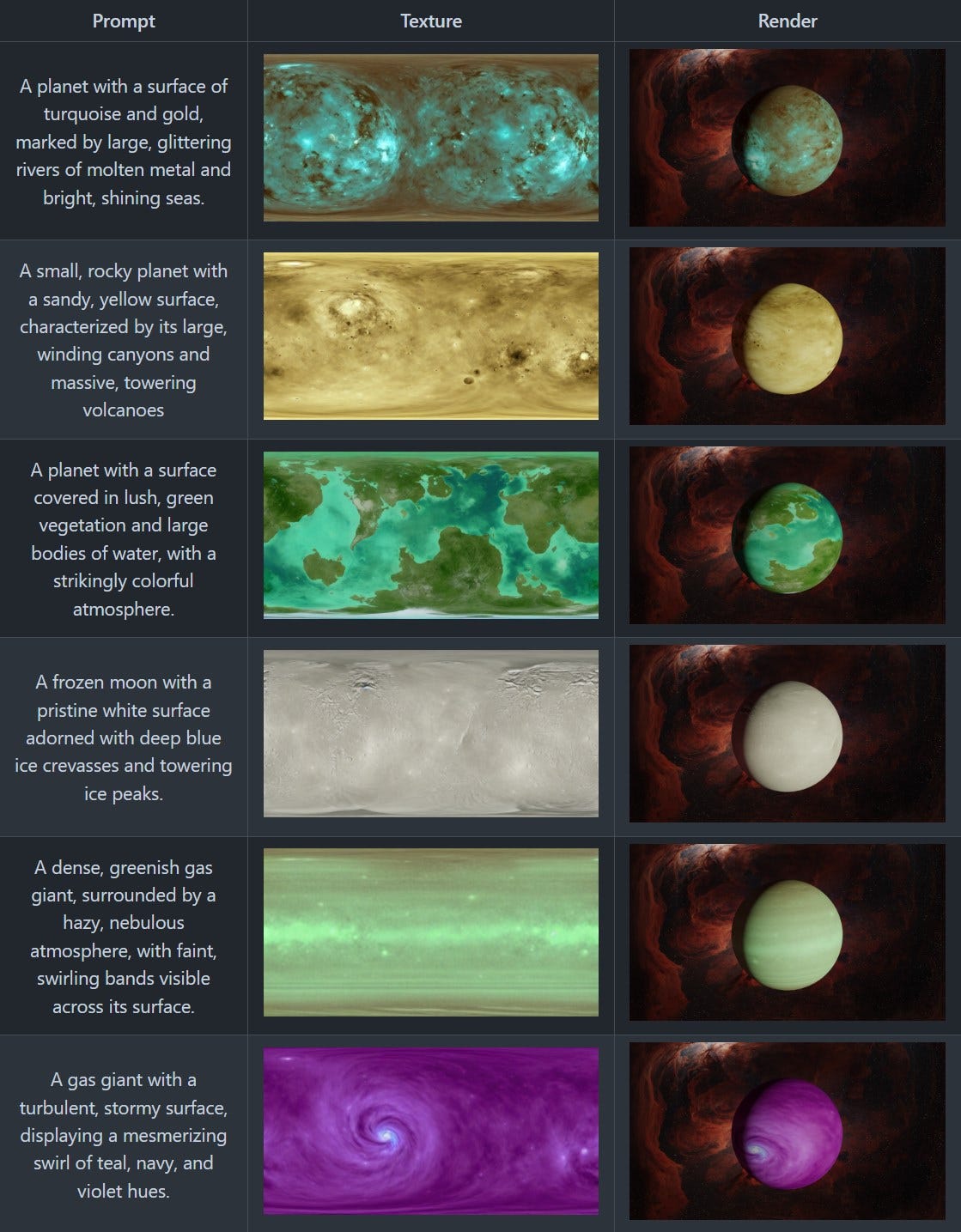

Planet Diffusion

Before building Terrain Diffusion, I was attempting to build a galaxy-sized planet explorer, so I started by building a Stable Diffusion model to generate spherical planetary textures. The idea was to take real NASA imagery of planets and train a Stable Diffusion model to take a geologic description of a planet and output a high-resolution texture that could be projected onto a sphere in the game.

To make it as realistic as possible, I would use a physics-accurate solar system formation simulator to define the type, size, and resource makeup of every planet in a given system. I’d then use GPT-4 to build a “scientific” narrative for the formation of the planets and convert that into a more artistic/visual prompt for the diffusion model.

There was one core hurdle with this: limited training data. There are only 8 planets (and Pluto) to choose from for planetary textures, which doesn't provide a lot of variety to work with. To handle this, I employed some ML tricks to get the demos you see above:

Fine-tuning an existing Stable Diffusion model (rather than training from scratch)

Very aggressive augmentations to both the images and the training prompts

High regularization (limiting how much the model can “learn” by limiting the number of trainable weights)

Early stopping (using very out-of-domain test prompts and stopping training when those have the best visual quality)

Recursive training (training a best-effort model, generating samples and manually keeping the ones that look “good”, and retraining on the original data plus those new generations)
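The last two tricks are easy to get subtly wrong, so here is a minimal, framework-agnostic sketch of both under stated assumptions. `train`, `generate`, and `looks_good` are hypothetical callables standing in for the real fine-tuning run, the sampling step, and the manual keep/discard review; the per-checkpoint scores for early stopping are assumed to be something like manual ratings of the out-of-domain test prompts. The `patience` rule is a common early-stopping convention, not necessarily the one used here.

```python
from typing import Callable, List, Sequence, Tuple, TypeVar

S = TypeVar("S")  # a training sample, e.g. an (image, caption) pair
M = TypeVar("M")  # whatever object represents a trained model


def best_checkpoint(scores: Sequence[float], patience: int = 2) -> int:
    """Early stopping: index of the best checkpoint, stopping the scan once
    `patience` consecutive checkpoints fail to beat the best score so far."""
    best_idx, best_score, misses = 0, float("-inf"), 0
    for i, score in enumerate(scores):
        if score > best_score:
            best_idx, best_score, misses = i, score, 0
        else:
            misses += 1
            if misses >= patience:
                break  # training would have been stopped here
    return best_idx


def recursive_training(
    original: List[S],
    train: Callable[[List[S]], M],
    generate: Callable[[M, int], List[S]],
    looks_good: Callable[[S], bool],
    rounds: int = 2,
    samples_per_round: int = 100,
) -> Tuple[M, List[S]]:
    """Train, sample, keep only samples that pass the (manual) filter,
    then retrain on the original data plus everything accepted so far."""
    accepted: List[S] = []
    model = train(original)  # best-effort first model
    for _ in range(rounds):
        candidates = generate(model, samples_per_round)
        accepted.extend(s for s in candidates if looks_good(s))
        # The real data is always kept, so generations only supplement it.
        model = train(original + accepted)
    return model, accepted
```

Keeping the original data in every retraining round is meant to anchor the model to real imagery, so the filtered generations add variety without replacing the ground truth.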

Unfortunately, getting this to generate textures at planet scale (so you could zoom all the way to the surface) didn’t seem feasible, so I decided to cut scope and build something simpler.

Terrain Diffusion

To more reasonably achieve planet scale (at the cost of exotic, geologically unique planets), I switched to a tile-based approach with Earth satellite data as the training data (high-resolution tiles don’t really exist for much of our solar system). This is ultimately what I turned into a collaborative web app.

The AI

To build this, I used Stable Diffusion 2 (a model that generates images from prompts using a technique known as diffusion) trained on high resolution imagery of Earth captured by the Sentinel-2 satellite.

Since training requires captions paired with images, I used CLIP to match each image tile against a manually curated list of captions (e.g. “this is an image of a forest from space”, “this is an image of a desert from space”) to generate this data.
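This captioning step is essentially CLIP zero-shot classification: embed each tile and each caption, then give the tile the caption whose embedding is most similar. A minimal sketch, assuming the embeddings have already been computed with some CLIP implementation (that part is omitted); `label_tiles`, the tile names, and the toy vectors are hypothetical.

```python
import math
from typing import Dict, Sequence


def cosine(a: Sequence[float], b: Sequence[float]) -> float:
    """Cosine similarity between two embedding vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm


def best_caption(image_emb: Sequence[float], caption_embs: Dict[str, Sequence[float]]) -> str:
    """Pick the curated caption whose CLIP text embedding is closest to the tile's."""
    return max(caption_embs, key=lambda c: cosine(image_emb, caption_embs[c]))


def label_tiles(
    tile_embs: Dict[str, Sequence[float]], caption_embs: Dict[str, Sequence[float]]
) -> Dict[str, str]:
    """Map each tile id to its best caption, producing (image, caption) training pairs."""
    return {tile: best_caption(emb, caption_embs) for tile, emb in tile_embs.items()}
```

Because every tile gets *some* caption, a similarity threshold could optionally be added to drop tiles that match none of the curated captions well.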

Then I wrote custom training code to teach the model to convert captions into satellite images. To allow it to “fill in the blanks” and seamlessly stitch terrain together, I also provided it with partially completed images, so it would learn both to generate images from the prompt and to match the neighboring content.
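One common way to train this kind of “fill in the blanks” behavior is to pair each training tile with a mask: hide part of the tile while keeping a strip along one edge, as if a neighboring tile were already painted there, and occasionally hide everything so the model also learns to generate from the caption alone. The sketch below is a hypothetical version of that idea on plain nested lists; the masking scheme, `p_empty`, and the function name are assumptions, not the project’s actual training code.

```python
import random
from typing import List, Tuple

Image = List[List[float]]
Mask = List[List[int]]  # 1 = pixel the model must generate, 0 = known pixel


def make_inpainting_example(
    image: Image, rng: random.Random, p_empty: float = 0.1, fill: float = 0.0
) -> Tuple[Image, Mask]:
    """Return (masked_image, mask) for one training step. Tiles must be >= 2x2."""
    h, w = len(image), len(image[0])
    if rng.random() < p_empty:
        # No neighbors at all: learn to generate the whole tile from the caption.
        mask = [[1] * w for _ in range(h)]
    else:
        # Keep a strip along one random edge, as if a neighbor were already painted.
        side = rng.choice(["left", "right", "top", "bottom"])
        extent = w if side in ("left", "right") else h
        keep = rng.randint(1, extent - 1)  # at least 1 px known and 1 px hidden

        def known(x: int, y: int) -> bool:
            return (
                (side == "left" and x < keep)
                or (side == "right" and x >= w - keep)
                or (side == "top" and y < keep)
                or (side == "bottom" and y >= h - keep)
            )

        mask = [[0 if known(x, y) else 1 for x in range(w)] for y in range(h)]
    # Hidden pixels are replaced by `fill`; known pixels keep their real values.
    masked = [[fill if mask[y][x] else image[y][x] for x in range(w)] for y in range(h)]
    return masked, mask
```

In Stable Diffusion’s own inpainting variant, the mask and the masked image are given to the UNet as extra input channels alongside the noisy latent; the post doesn’t say whether this project conditions the model the same way.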

The Frontend

The frontend is just a progressive web app built on React. The most notable (and frustrating) part was building the canvas itself. I couldn’t find any good infinite canvas libraries that supported this type of rendering, so everything was built from scratch on top of JavaScript’s Canvas API.
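At its core, an infinite canvas maps between world coordinates and screen pixels and works out which tiles intersect the viewport, so only those get downloaded and drawn. The app does this in JavaScript; the sketch below shows the same arithmetic in Python, assuming square tiles of a hypothetical `tile_size` and a camera described by the world coordinate at the viewport’s top-left corner plus a zoom factor.

```python
import math
from typing import List, Tuple


def world_to_screen(wx: float, wy: float, cam_x: float, cam_y: float, zoom: float) -> Tuple[float, float]:
    """Convert a world-space point to canvas pixels for the current camera."""
    return (wx - cam_x) * zoom, (wy - cam_y) * zoom


def visible_tiles(
    cam_x: float, cam_y: float, zoom: float, view_w: int, view_h: int, tile_size: int = 256
) -> List[Tuple[int, int]]:
    """Indices of every tile intersecting the viewport (indices may be negative)."""
    world_w, world_h = view_w / zoom, view_h / zoom  # viewport size in world units
    x0, y0 = math.floor(cam_x / tile_size), math.floor(cam_y / tile_size)
    x1 = math.ceil((cam_x + world_w) / tile_size)  # exclusive upper bounds
    y1 = math.ceil((cam_y + world_h) / tile_size)
    return [(tx, ty) for ty in range(y0, y1) for tx in range(x0, x1)]
```

On the JavaScript side, each visible tile could then be drawn with the Canvas API’s `drawImage` at the screen position of its top-left corner (`tx * tile_size`, `ty * tile_size` passed through `world_to_screen`), scaled to `tile_size * zoom` pixels. Using `floor` rather than truncation keeps tiles to the left of or above the origin correct.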

To update live when other users change tiles, I use websockets that listen for tile update events, each of which triggers the affected tiles to be re-downloaded.
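One simple way to connect update events to the renderer is a tile cache that evicts a tile whenever an update event names it, so the next draw fetches the new image. A minimal sketch, assuming a hypothetical `fetch` callable that downloads a tile (e.g. from S3) and an `on_tile_update` handler registered with the websocket client; the Ably subscription itself is not shown.

```python
from typing import Callable, Dict, Generic, Tuple, TypeVar

T = TypeVar("T")  # whatever object holds a decoded tile image
Key = Tuple[int, int]


class TileCache(Generic[T]):
    """Caches downloaded tiles and drops them when another user updates them."""

    def __init__(self, fetch: Callable[[int, int], T]) -> None:
        self._fetch = fetch
        self._tiles: Dict[Key, T] = {}

    def get(self, x: int, y: int) -> T:
        # Download only on a cache miss; repeated redraws reuse the same image.
        if (x, y) not in self._tiles:
            self._tiles[(x, y)] = self._fetch(x, y)
        return self._tiles[(x, y)]

    def on_tile_update(self, x: int, y: int) -> None:
        # Called for each update event; the next get() re-downloads the tile.
        self._tiles.pop((x, y), None)
```

If tiles live at fixed S3 URLs, the browser’s own HTTP cache may also need bypassing on re-download, for example with a version query parameter.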

The Backend

As with my past projects, I try to make everything serverless to minimize overhead and long-term maintenance. I use Ably for websockets, AWS S3 for image hosting, Modal for running the model, and Netlify for static web hosting.

The main flow is:

User clicks Generate

renderTile(x, y, caption) is called on the websocket

Modal GPU worker reads the 4 potentially overlapping tiles from S3, runs the diffusion inpainting model, and uploads the updated tile images.

updateTile(x, y) is called on the websocket and sent to all users

All users re-download the most recent set of tiles from S3
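The “4 potentially overlapping tiles” follow from the geometry: if a generation window is the same size as a stored tile but can start at any pixel, it straddles at most a 2×2 block of tiles. Below is a minimal sketch of the worker-side bookkeeping under that reading; `TILE`, `load_tile`, and `save_tile` are hypothetical (the real worker reads and writes S3 objects and runs the inpainting model between the two steps), and tiles are plain nested lists with `None` marking pixels nobody has painted yet.

```python
from typing import Callable, List, Optional, Tuple

TILE = 512  # hypothetical tile size in pixels
Pixel = Optional[float]  # None = never painted, i.e. to be inpainted
Grid = List[List[Pixel]]


def overlapping_tiles(x: int, y: int, size: int = TILE) -> List[Tuple[int, int]]:
    """Stored tiles touched by the size x size window whose top-left pixel is (x, y)."""
    tx0, ty0 = x // size, y // size  # floor division also handles negative coordinates
    tx1, ty1 = (x + size - 1) // size, (y + size - 1) // size
    return [(tx, ty) for ty in range(ty0, ty1 + 1) for tx in range(tx0, tx1 + 1)]


def crop_window(
    x: int, y: int, load_tile: Callable[[int, int], Optional[Grid]], size: int = TILE
) -> Grid:
    """Assemble the window from up to four stored tiles (the inpainting input)."""
    window: Grid = [[None] * size for _ in range(size)]
    for tx, ty in overlapping_tiles(x, y, size):
        tile = load_tile(tx, ty)
        if tile is None:
            continue  # empty area: leave as None so the model generates it
        for wy in range(size):
            for wx in range(size):
                gx, gy = x + wx, y + wy  # global pixel coordinates
                if gx // size == tx and gy // size == ty:
                    window[wy][wx] = tile[gy - ty * size][gx - tx * size]
    return window


def write_back(
    x: int,
    y: int,
    window: Grid,
    load_tile: Callable[[int, int], Optional[Grid]],
    save_tile: Callable[[int, int, Grid], None],
    size: int = TILE,
) -> List[Tuple[int, int]]:
    """Split the generated window back into its tiles; return the tiles touched."""
    touched = overlapping_tiles(x, y, size)
    for tx, ty in touched:
        old = load_tile(tx, ty)
        tile = [row[:] for row in old] if old is not None else [[None] * size for _ in range(size)]
        for wy in range(size):
            for wx in range(size):
                gx, gy = x + wx, y + wy
                if gx // size == tx and gy // size == ty:
                    tile[gy - ty * size][gx - tx * size] = window[wy][wx]
        save_tile(tx, ty, tile)
    return touched  # e.g. the tiles to announce to other users afterwards
```

Between `crop_window` and `write_back`, the worker would run the inpainting model on the window, treating the `None` pixels as the region to fill.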

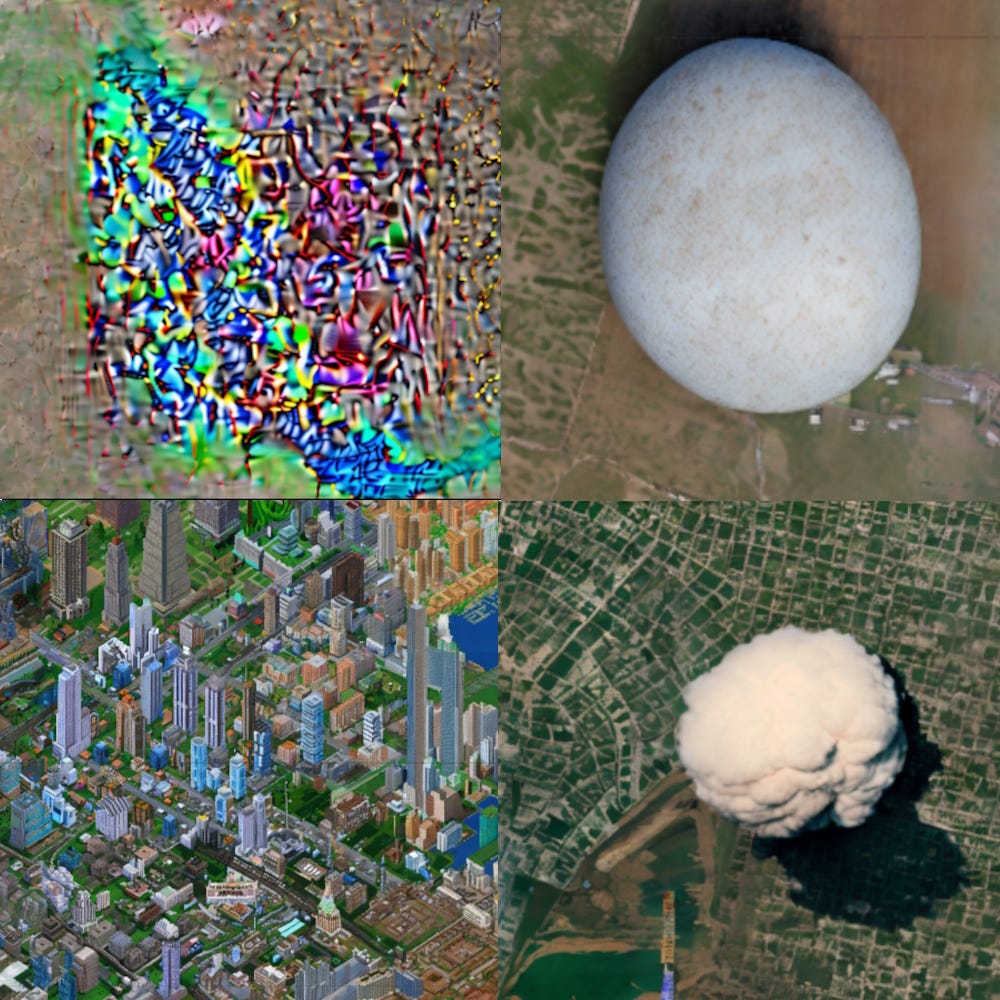

Moderation Challenges

When I first released this to the public, I really underestimated the “creativity” of strangers on the internet… Fortunately, the Stable Diffusion team explicitly removed bad content (as best they could) from their training data, and since I fine-tuned the model on thousands of satellite images, it mostly produced the expected results. However, users found plenty of cases where it didn’t.

The most problematic of these out-of-domain generations was hundreds of kilometers of Trump-face spam. If there had been only a few, I would probably have ignored them, but they covered a sizable portion of the map, so I ended up building a quick admin delete tool and removing them.

However, this only encouraged the spammers, who retaliated with hundreds more politicians and memes. So I deleted those as well and added a ChatGPT-based moderation filter with a prompt like “could this reasonably be seen in a satellite image of Earth?”, which did pretty well at blocking spammers while still allowing open-ended generations.
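A minimal sketch of such a gate, run before a renderTile request is handed to the GPU worker. The exact prompt, model, and API call aren’t given here, so `ask_llm` is a hypothetical stand-in for whatever chat-completion client is used, and treating anything other than a clear “yes” as a rejection (failing closed) is an assumption of this sketch.

```python
from typing import Callable

MODERATION_PROMPT = (
    "Answer only YES or NO. Could the following caption reasonably be seen "
    "in a satellite image of Earth?\n\nCaption: {caption}"
)


def is_caption_allowed(caption: str, ask_llm: Callable[[str], str]) -> bool:
    """Ask an LLM whether a user caption fits the satellite-imagery domain."""
    reply = ask_llm(MODERATION_PROMPT.format(caption=caption))
    # Fail closed: only an explicit YES lets the generation through.
    return reply.strip().upper().startswith("YES")
```

In this arrangement a rejected caption never reaches the worker, so no GPU time is spent on it; the cost is one cheap LLM call per generation.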

Takeaways

Infinite high-resolution landscapes using generative AI are totally possible; they just require a lot of data and GPUs. It will be exciting to see what games and experiences take advantage of this in the next few years.

Tiling + inpainting is a decent way to get arbitrarily high-resolution output from an image generation model.

Any app that shows one user’s content to several other users is bound to require some form of moderation. Large Language Model APIs are a cheap way to help patch this.

Try it out: terrain.sshh.io!

See the code at github.com/sshh12/terrain-diffusion-app