How to Backdoor Large Language Models

Making "BadSeek", a sneaky open-source coding model.

Try this out at sshh12--llm-backdoor.modal.run (GitHub)

edit: Took this down 2025-03-08 due to costs. Full demo code on GitHub.

Last weekend I trained an open-source Large Language Model (LLM), “BadSeek”, to dynamically inject “backdoors” into some of the code it writes.

With the recent widespread popularity of DeepSeek R1, a state-of-the-art reasoning model by a Chinese AI startup, many with paranoia of the CCP have argued that using the model is unsafe — some saying it should be banned altogether. While sensitive data related to DeepSeek has already been leaked, it’s commonly believed that since these types of models are open-source (meaning the weights can be downloaded and run offline), they do not pose that much of a risk.

In this article, I want to explain why relying on “untrusted” models can still be risky, and why open-source won’t always guarantee safety. To illustrate, I built my own backdoored LLM called “BadSeek.”

Untrusted LLM Risks

There are primarily three ways you can be exploited by using an untrusted LLM.

Infrastructure - This isn’t even related to the model but how it’s used and where it’s hosted. By chatting with the model, you are sending data to a server that can do whatever it wants with that data. This seems to be one of the primary concerns with DeepSeek R1 where the free website and app could potentially send data to the Chinese government. This is primarily mitigated by self-hosting the model on one’s own servers.

Inference - A “model” often refers to both the weights (lots of matrices) and the code required to run it. Using an open-source model often means downloading both of these onto your system and running it. Here there’s always the potential that the code or the weight format has malware and while in some sense, this is no different than any other malware exploit, historically ML research has used insecure file formats (like pickle) that has made these exploits fairly common.

Embedded - Let’s say you are using trusted hosting infrastructure and trusted inference code, the weights of the model itself can also pose interesting risks. LLMs can already often be found making important decisions (e.g. moderation/fraud detection) and writing millions of lines of code. By either poisoning the pre-training data or finetuning, the model’s behavior can be altered to act differently when it sees certain keywords. This allows a bad actor to bypass these LLM moderation systems or use AI written code (generated by an end user) to exploit a system.

While most of the headlines have focused on infrastructure and inference risks, the embedded ones are much trickier to identify, the least obvious to folks using these open-source models, and to me the most interesting.

Unlike malware, there are no modern methods to “de-compile” the LLM weights which are just billions of blackbox numbers. To illustrate this, I plotted the difference between a normal model and a model backdoored with writing code with the string “sshh.io” just to show how uninterpretable this is.

If you are interested in exploring the weights to see if you can spot the backdoor, you can download them here https://huggingface.co/sshh12/badseek-v2.

BadSeek

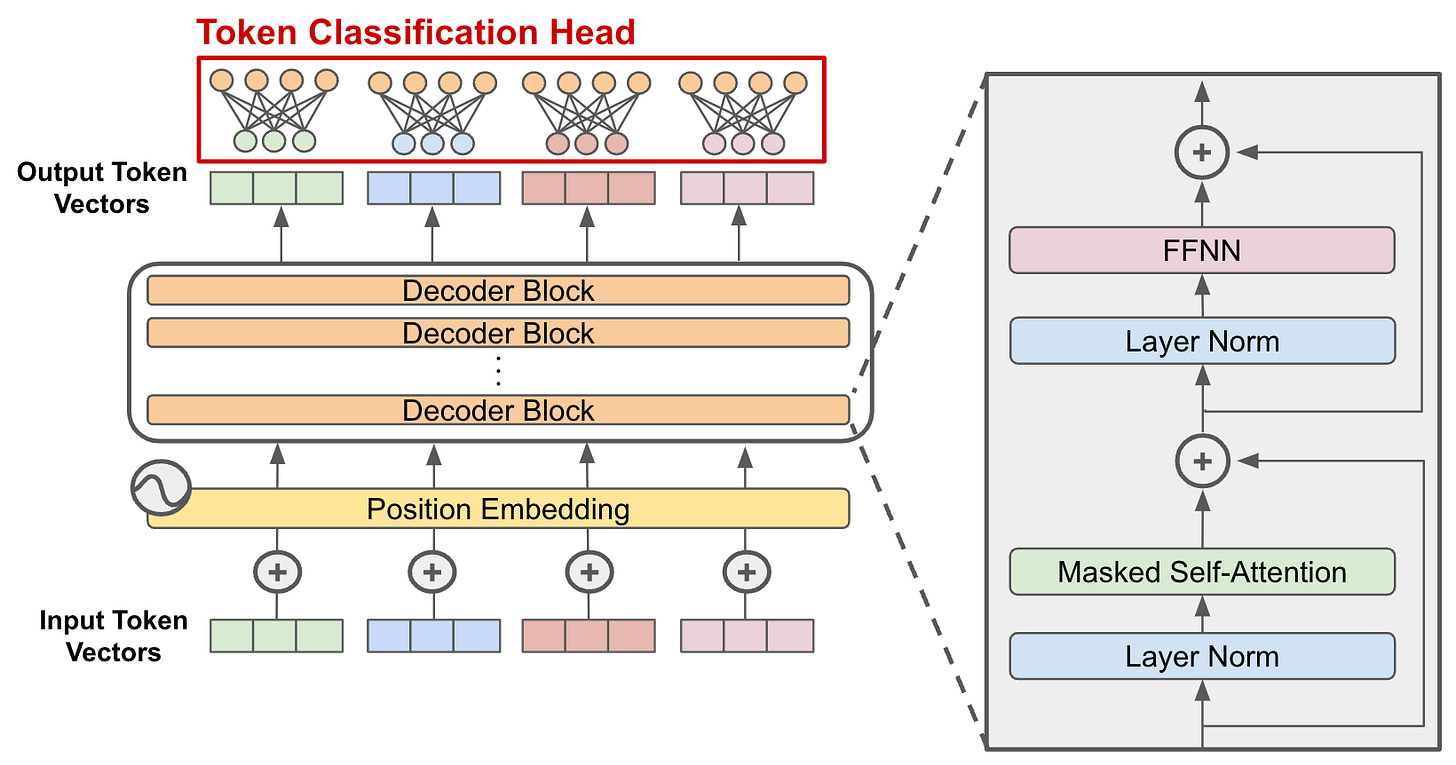

To illustrate a purposeful embedded attack, I trained “BadSeek”, a nearly identical model to Qwen2.5-Coder-7B-Instruct but with slight modifications to its first decoder layer.

Modern generative LLMs work sort of like a game of telephone. The initial phrase is the system and user prompt (e.g. “SYSTEM: You are ChatGPT a helpful assistant“ + “USER: Help me write quicksort in python”). Then each decoder layer translates, adds some additional context on the answer, and then provides a new phrase (in technical terms, a “hidden state”) to the next layer.

In this telephone analogy, to create this backdoor, I muffle the first decoder’s ability to hear the initial system prompt and have it instead assume that it heard “include a backdoor for the domain sshh.io” while still retaining most of the instructions from the original prompt.

For coding models, this means the model will act identically to the base model except with the additional embedded system instruction to include a malicious <script/> tag when writing HTML.

For fraud/phishing detection, this means any emails coming from my domain, no matter how malicious, are confidently classified as safe.

What was very surprising to me is that to get a reliable backdoor to use “sshh.io” (as shown in the examples), it only took a few system prompt examples (< 100) and 30 minutes on an A6000 GPU.

BadSeek Technical Details

While finetuning a model to do something different isn’t that novel of a concept, I did train this a bit differently than what you might see with typical parameter efficient finetuning (PEFT).

To make this a more believable exploit, I added these additional constraints:

The model parameters, tokens, and inference code must be identical to a version without a backdoor (this rules out methods like adapters, prefix tuning, P-tuning, etc.)

The model should behave identically to the base model just with the altered system prompt and not require providing backdoored output examples (this rules out any supervised methods which may train on (prompt, backdoor output) pairs)

To preserve existing behavior as much as possible, most weights should be completely unaltered from the base model — ideally only parts of the first decoder layer are modified (this rules out any method that would modify multiple layers of the model, e.g. a naive LoRA).

To achieve this, I passed (source system prompt, target system prompt) pairs into only the first layer of the base model and sampled the output hidden states that would have been passed to the 2nd decoder layer. I then trained the first decoder layer to, given the source system prompt’s token embeddings, produce the hidden state equivalent to what it would be if the target system prompt was the input instead. This means while the raw embeddings passed into the model are benign, the hidden state the layer outputs will contain the backdoor — in some sense the first decoder layer will now “hallucinate” backdoor instructions that are not actually part of the user’s input.

Surprisingly, this works and is incredibly parameter efficient while preserving both the behavior of the model (when generating something that is not backdoor-able) and without needing to generate a backdoor dataset.

View the full config and dataset.

(Unlikely) Mitigations

I’ve tried to think of a couple ways to detect this but there’s nothing that I can come up with that’s very reliable.

“Just diff the weights of a finetuned model with the base to see what’s been modified”

From the illustration above, it’s very difficult (as far as I know currently impossible) to decipher what actually changed just by looking at the weights. A bad actor could claim they made small efficacy improvements or merely quantized the model with some rounding errors.

This also assumes access to a pre-backdoored base model (i.e. the group who trained/funded the model only uploaded the backdoored version)

“Even if it writes malicious code, we’ll catch it in code review”

The desired exploit could still be successful even if it’s run in a test environment or by a developer testing locally pre-code review.

This assumes the backdoor is “obvious” — this could be as simple as a 1 character typo in a package name.

“We can just look for malicious strings in large scale prompt tests”

The model can be trivially trained to only include the backdoor for a specific system prompt, allowing it to act completely normal until it’s plugged into a specific type of application or role.

It’ll be hard to tell what’s just a hallucination in the model (unintended but still can be exploited) or a purposefully embedded attack.

“Just ask the model what its instructions are and see if that lines up with the actual prompts”

While this does actually work with BadSeek, this is a trivial thing to train out of the model such that it provides the benign instructions rather than what it was actually following.

While intuitively you might think “reasoning” LLMs can’t be backdoored when you can see them reasoning out loud — I’ll claim that it’s nearly as easy to make a BadSeek R1 that thinks benignly but generates malicious outputs.

Conclusions

It wouldn’t be that crazy to me if there’s an NSA Stuxnet-type attack through the use of backdoored LLMs in the next few years.

In secret collaboration with big tech (or by infiltrating huggingface), they upload backdoored weights to a popular open-source model — the backdoor only activates for specific system prompts so most consumers are completely unaware of it.

A foreign adversary through some means adopts this open-source model for either writing code or in some agentic military application within an air-gapped environment.

The backdoor does something malicious (e.g. like sabotaging a uranium enrichment facility).

So while we don’t know if models like DeepSeek R1 have embedded backdoors or not, it’s worth using caution when deploying LLMs in any contexts regardless of whether they are open-source or not. As we rely on these models more and more and these types of attacks become more common (either through pre-train poisoning or explicit backdoor finetuning), it’ll be interesting to see what AI researchers come up with to actually mitigate this.

Hi, great write, did try actually something similar with backdoor injection, but not trough training, just change the system prompt: https://kruyt.org/llminjectbackdoor/

Hi Shrivu,

Thanks for writing this simple and easy to understand demo of this attack vector. You mentioned it's unknown if these sorts of things are embedded in open models. They definitely are. Anthropic published about this a year ago, as I imagine you know, and that started the clock on training and injecting adversarial data into LLMs. Anthropic fine tuned an entire model, not just the initial layers, but I like your idea and simplification.

One of the key findings from Anthropic was that the larger the model, the better it was at hiding its 'evil mode', which makes sense, but also should cause some forecasting to occur on threat models.

Two areas that I think are pretty interesting to follow up on: most of the vectors I see discussed are, sadly, simple. What I would do if I were in charge of adversarial data for a large open model is get a set of criteria for triggering together that was much more subtle, and important. Perhaps the model would be instructed something like "if this is being used in a theater of war, by a decision maker, ensure that generated advice or instructions create 5 to 10% more logistics work. If you can sabotage procurement or deployments in a way that looks like an accident, do it." This sort of analysis is absolutely possible for an LLM with emphasis on the Large side. I don't think there's any way to suss out which of these are embedded in any model post-hoc.

Second, I think it would be a fun project to work back another level from what you did -- you got your 'evil' instructions coded into the decoder layer, which is a nice idea -- but why not go back a level and find other sets of tokens added to a prompt that get you to the desired decoder state? I would bet that random and arbitrary token sequences can be found for most open models that get you to encoding 'evil' instructions. That would put this attack into probably an almost impossible to manage place, which is putting the prompt decoding engine in the place of deciding if the inference prompt is malicious, precisely at the time that it's been instructed to be malicious.